Summary

Are digital images submitted as court evidence genuine, or have the pictures been altered or modified? We developed a range of algorithms that perform automated authenticity analysis of JPEG images and implemented them in a commercially available forensic tool. The tool produces a concise estimate of the image’s authenticity and clearly displays the probability that the image has been forged. This paper discusses the methods, tools and approaches used to detect the various signs of manipulation in digital images.

How many kittens are sitting on the street? If you thought “four”, read on to find out!

Introduction

Today, almost everyone has a digital camera, and billions of digital images have been taken. Some of these images are used for purposes other than family photo albums or Web site decoration.

With the rise of digital photography, manufacturers of graphic editing tools quickly gained momentum. The tools are becoming cheaper and easier to use, so easy in fact that anyone can use them to enhance their images. Editing or post-processing, if done properly, can greatly enhance the appearance of a picture, increase its impact on the viewer and better convey the artist’s message. But at what point does a documentary photograph become a fictional work of art?

While editing pictures is perfectly acceptable for most purposes, certain types of photographs must never be manipulated. Digital pictures are routinely handed to news editors as part of event coverage, and they are presented to courts as evidence. For news coverage, certain types of alterations or modifications (such as cropping, straightening verticals, or adjusting colors and gamma) may or may not be acceptable. Images presented as court evidence must not be manipulated in any way; otherwise, they lose their credibility as evidence.

Today’s powerful graphic editors and sophisticated image manipulation techniques make it extremely easy to modify original images in such a way that the alterations are impossible for an untrained eye to catch and can even escape the scrutiny of experienced editors at reputable news media. Even a highly competent forensic expert can miss certain signs of a fake, potentially allowing forged (altered) images to be accepted as court evidence.

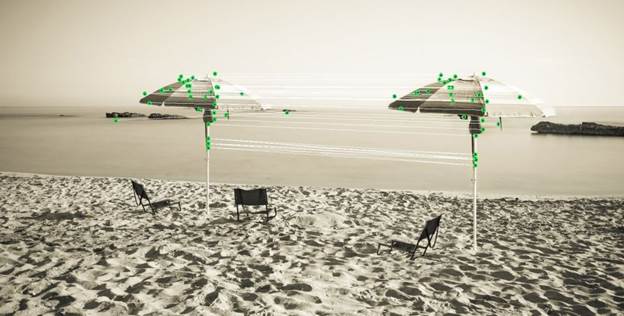

How many umbrellas? Read on to find out!

Major camera manufacturers attempted to address the issue by introducing systems based on secure digital certificates. These systems were meant to prove that images had not been altered after being captured by the camera. Although obviously aimed at photojournalists and editors, such systems were also used in legal cases to support the authenticity of court evidence. The approach looks terrific on paper. The only problem is that it does not work. A Russian company was able to easily forge images signed by Canon and then Nikon digital cameras. The obviously faked images successfully passed the authenticity test of the respective manufacturers’ verification software.

This brings us to a question: if human experts have a hard time determining whether a particular image was altered, and if existing certificate-based authenticity verification systems cannot be relied upon, should we simply give up?

This paper demonstrates a new probabilistic approach that allows automatic authenticity analysis of a digital image. The solution uses multiple algorithms that analyze different aspects of the digital image and employs a neural network to produce an estimate of the image’s authenticity, expressed as the probability that the image has been forged.

1. What Is a Forged Image?

What constitutes a manipulated image? For the purpose of this paper, we consider an image altered if it was modified, altered or “enhanced” with any software after it left the camera, including RAW conversion tools.

That said, we don’t consider an image to be altered if only in-camera, internal conversions, filters and corrections such as certain aberration corrections, saturation boost, shadow and highlight enhancements and sharpening are applied. After all, the processing of raw pixel data captured from the digital sensor is exactly what the camera’s processor is supposed to be doing.

But is every altered image a forged one? What if the only things done to the image were standard and widely accepted techniques such as cropping, rotating or horizon correction? These and some other techniques do alter the image but don’t necessarily forge it, and this argument may be put before an editor or a judge to have an altered image accepted as genuine [1]. Therefore, the whole point of forgery analysis is to determine whether any changes were made to the meaningful content of the image. This is why we analyze an image at the pixel level: we want to detect whether significant changes were made to the actual pixels, altering the content of the image rather than just its appearance on the screen.

Considering all of the above, it’s pretty obvious that no single algorithm can reliably detect content alterations. Our solution uses multiple algorithms, each falling into one of two major groups: pixel-level content analysis algorithms that locate modified areas within the image, and algorithms that analyze image format specifications to determine whether certain corrections were applied to the image after it left the camera.

In addition, certain methods we had high hopes for (e.g. block artifact grid detection) turned out to be inapplicable. We’ll discuss those methods and the reasons why they cannot be used.

2. Forgery Detection Algorithms

Providing a comprehensive description of each and every algorithm used for detecting forged images would not be feasible and is beyond the scope of this paper. We will describe five major techniques used in our solution to feed the decision-making neural network (the description of which is also beyond the scope of this paper).

The algorithms made it into a working prototype and then into a commercial implementation. At this time, the forgery detection techniques are used in the Photo Forgery Detection module, an extension of the forensic tool Belkasoft Evidence Center. The module can analyze images discovered with Belkasoft Evidence Center and provide the probability that an image was manipulated (forged).

2.1. JPEG Format Analysis

JPEG is the de facto standard in digital photography. Most digital cameras can produce JPEGs, and many can only produce files in JPEG format.

The JPEG format is an endless source of data that can be used to detect forged images. The JPEG Format Analysis algorithm makes use of information stored in the many technical meta-tags at the beginning of each JPEG file. These tags contain information about quantization matrices, Huffman code tables, chroma subsampling and many other parameters, as well as a miniature version (thumbnail) of the full image. The content and sequence of those tags, as well as which particular tags are present, depend on the image itself as well as on the device that captured it or the software that modified it.
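The sequence of tags mentioned above can be read directly from the file. The sketch below walks the marker segments at the start of a JPEG file, up to the start-of-scan (SOS) marker, and returns their names and payload sizes in order; that ordered list is the kind of fingerprint that tends to differ between camera firmware and editing software. It relies only on the marker layout defined in the JPEG standard (ITU-T T.81) and assumes no standalone markers appear before SOS, which holds for ordinary files. The function names are our own, not part of the authors' tool.

```python
import struct

# Names of common markers that precede the image data (ITU-T T.81)
MARKER_NAMES = {
    0xC0: "SOF0",  # baseline frame header
    0xC2: "SOF2",  # progressive frame header
    0xC4: "DHT",   # Huffman tables
    0xDB: "DQT",   # quantization tables
    0xDD: "DRI",   # restart interval
    0xDA: "SOS",   # start of scan
    0xFE: "COM",   # comment
}


def marker_name(marker: int) -> str:
    """Human-readable name for a marker byte; APPn segments carry EXIF, JFIF, etc."""
    if marker in MARKER_NAMES:
        return MARKER_NAMES[marker]
    if 0xE0 <= marker <= 0xEF:
        return f"APP{marker - 0xE0}"
    return f"0x{marker:02X}"


def list_jpeg_segments(data: bytes) -> list:
    """Return [(marker_name, payload_length), ...] for segments up to start of scan."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG file (missing SOI marker)")
    segments = []
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # padding (fill byte) before a marker
            pos += 1
            continue
        # The big-endian length field counts itself but not the 2-byte marker
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        segments.append((marker_name(marker), length - 2))
        if marker == 0xDA:  # entropy-coded image data follows SOS
            break
        pos += 2 + length
    return segments


# Usage with a real file (hypothetical file name):
# with open("photo.jpg", "rb") as f:
#     for name, size in list_jpeg_segments(f.read()):
#         print(name, size)
```

Comparing such lists across many files from the same camera model is one straightforward way to learn what "normal" looks like for that device.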

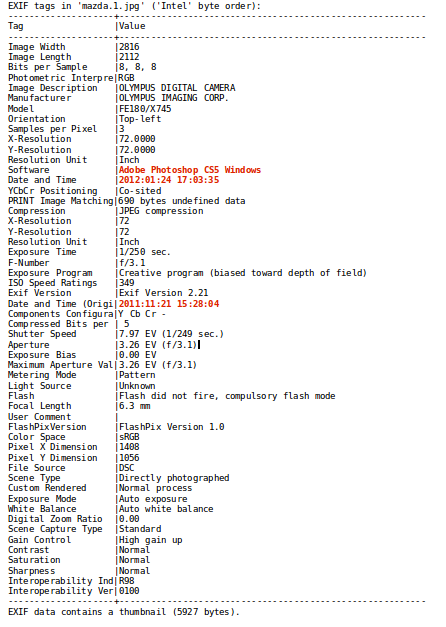

In addition to technical information, JPEG tags contain important information about the photo, including shooting conditions and parameters such as ambient light levels, aperture and shutter speed, the make and model of the camera and lens the image was taken with, lens focal length, whether or not the flash was used, color profile information, and so on.

The basic analysis method first verifies the validity of EXIF tags in an attempt to find discrepancies. This may include, for example, checks for EXIF tags added in post-processing by certain editing tools, comparing the capture date with the date of last modification, and so on. However, EXIF tags can be forged so easily that, while we can treat existing EXIF discrepancies as a positive sign that an image was altered, the fact that the tags are “in order” does not provide any meaningful information.
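A minimal sketch of the two basic checks, assuming the EXIF tags have already been decoded into a dictionary keyed by standard tag names (`Software`, `DateTimeOriginal`, `DateTime`). The editor list is a hypothetical placeholder rather than the authors' data, and, in line with the paragraph above, an empty result proves nothing about authenticity.

```python
from datetime import datetime

# Hypothetical, deliberately short list; a real tool would rely on a maintained database
KNOWN_EDITORS = ("photoshop", "gimp", "lightroom", "paint.net")

EXIF_TIME_FORMAT = "%Y:%m:%d %H:%M:%S"  # EXIF dates look like "2011:05:01 10:00:00"


def exif_discrepancies(tags: dict) -> list:
    """List reasons why already-decoded EXIF tags suggest post-processing."""
    reasons = []
    software = str(tags.get("Software", ""))
    if any(name in software.lower() for name in KNOWN_EDITORS):
        reasons.append(f"Software tag names an editor: {software!r}")
    captured = tags.get("DateTimeOriginal")  # when the picture was taken
    modified = tags.get("DateTime")          # when the file was last changed
    if captured and modified:
        t_captured = datetime.strptime(captured, EXIF_TIME_FORMAT)
        t_modified = datetime.strptime(modified, EXIF_TIME_FORMAT)
        if t_captured != t_modified:
            reasons.append(f"capture time {captured} differs from modification time {modified}")
    return reasons


# An empty list only means these particular checks found nothing
print(exif_discrepancies({
    "Software": "Adobe Photoshop CS5",
    "DateTimeOriginal": "2011:05:01 10:00:00",
    "DateTime": "2011:05:03 18:30:00",
}))
```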

Our solution attempts to discover discrepancies between the actual image and the available EXIF information by comparing the actual EXIF tags against the tags typically written by a particular device (the one specified as the capturing device in the corresponding EXIF tag). We collected a comprehensive database of EXIF tags produced by a wide range of digital cameras, including many smartphone models, and we are actively adding information about new models as soon as they become available.
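At its core, the comparison against a device profile is set arithmetic: tags the claimed camera never writes, and tags it always writes but that are missing, are both suspicious. The sketch below assumes a profile database keyed by the `Make` and `Model` tags; the camera name and its tag set are invented for illustration and are not taken from the authors' database.

```python
# Hypothetical profile database: (Make, Model) -> tags that camera is known to write
DEVICE_PROFILES = {
    ("ExampleCam", "Model X"): {
        "Make", "Model", "DateTime", "DateTimeOriginal", "ExposureTime",
        "FNumber", "ISOSpeedRatings", "Flash", "FocalLength", "MakerNote",
    },
}


def compare_with_profile(tags: dict):
    """Return (unexpected_tags, missing_tags) for the claimed device, or None if unknown."""
    profile = DEVICE_PROFILES.get((tags.get("Make"), tags.get("Model")))
    if profile is None:
        return None  # no reference data, so no conclusion either way
    present = set(tags)
    return present - profile, profile - present


example = {
    "Make": "ExampleCam", "Model": "Model X", "DateTime": "2011:05:03 18:30:00",
    "DateTimeOriginal": "2011:05:01 10:00:00", "ExposureTime": "1/125",
    "FNumber": 5.6, "ISOSpeedRatings": 200, "Flash": 0, "FocalLength": 35,
    "Software": "Some Editor 2.0",
}
unexpected, missing = compare_with_profile(example)
print("unexpected:", unexpected, "missing:", missing)
```

A missing maker note or an extra tag is not proof of forgery on its own; in a scheme like the one described here it would be one more input to the final decision.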

In addition to EXIF analysis, we review the quantization tables in all image channels. Most digital cameras use a limited set of quantization tables; therefore, we can discover discrepancies by comparing hashes of the image’s actual quantization tables against those of the tables a given camera is expected to produce.
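A sketch of the table lookup, assuming the quantization tables have already been parsed from the DQT segments into lists of 64 integers. All tables are hashed together with SHA-256 to form one compact key; the `KNOWN_TABLES` reference data and its entry are hypothetical placeholders.

```python
import hashlib
import struct


def table_fingerprint(tables) -> str:
    """SHA-256 over all quantization tables (64 values each), in file order."""
    h = hashlib.sha256()
    for table in tables:
        if len(table) != 64:
            raise ValueError("a JPEG quantization table has 64 entries")
        h.update(struct.pack(">64H", *table))  # 16-bit packing also covers 16-bit tables
    return h.hexdigest()


# Hypothetical reference data: camera -> fingerprints of table sets it is known to use
KNOWN_TABLES = {
    "ExampleCam Model X": {table_fingerprint([[2] * 64, [3] * 64])},
}


def tables_match_camera(tables, camera: str):
    """True or False if reference data exists for the camera, otherwise None."""
    known = KNOWN_TABLES.get(camera)
    if known is None:
        return None
    return table_fingerprint(tables) in known
```

Hashing the whole set, rather than each table separately, also captures the number and order of tables, which is itself encoder-specific.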

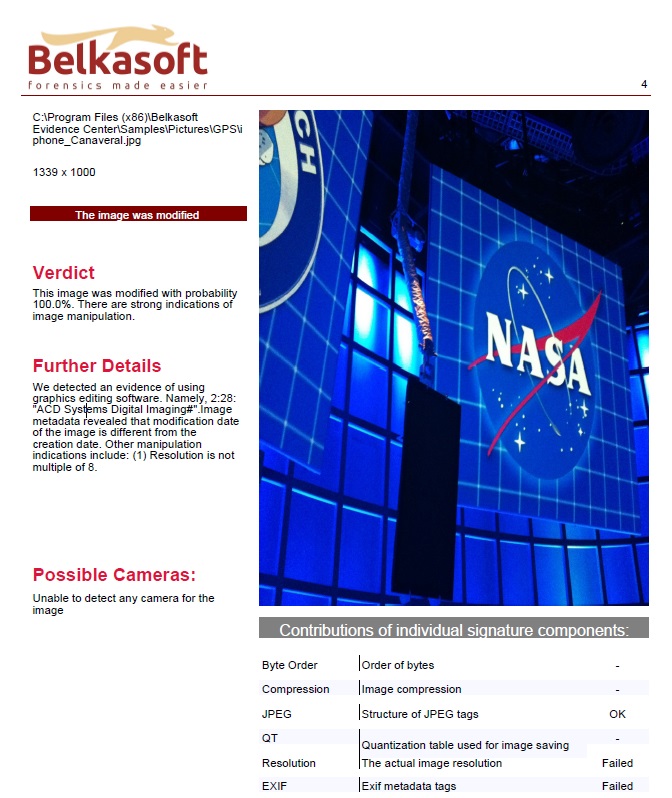

The EXIF tags of this image are a clear indication of manipulation: the “Software” tag shows the software used to edit the image, and the original date and time do not match the date and time of last modification.

2.2. Double Quantization Effect

This algorithm is based on certain quantization artifacts appearing when applying JPEG compression more than once. If a JPEG file was opened, edited, then saved, certain compression artifacts will inevitably appear.

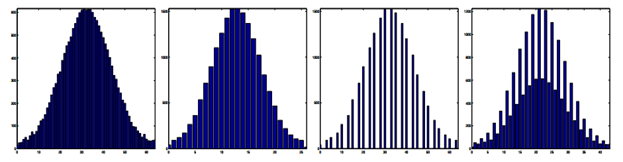

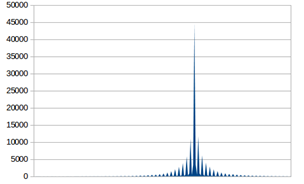

To detect the double quantization effect, the algorithm builds 192 histograms of discrete cosine transform (DCT) coefficient values. Certain quantization effects appear on these histograms only if an image was saved in JPEG format more than once. If the effect is discovered, we can definitely tell that the image was edited (or at least re-saved by a graphic editor). However, if the effect is not discovered, we cannot draw any definite conclusions about the image, as it could, for example, have been developed from a RAW file, edited in a graphic editor and saved to a JPEG file just once.

These two images look identical, although the second picture was opened in a graphic editor and then saved.

The histograms make the difference clear. The first two represent a typical file that was saved only once; the other two demonstrate what happens to a JPEG image when it is opened and saved as JPEG once again.
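The effect can be reproduced with a toy simulation of a single DCT coefficient. The 192 histograms presumably correspond to the 64 coefficient positions of an 8x8 block in each of three color channels; that reading is our assumption. The sketch draws synthetic Laplacian-like coefficients, quantizes them once with step 2, and separately quantizes them with step 5, dequantizes and re-quantizes with step 2. It then measures the fraction of empty histogram bins, which is one simple symptom of double quantization. The data and step sizes are illustrative, not the authors' detector.

```python
import random
from collections import Counter


def quantize(values, step):
    """JPEG-style quantization: divide by the step and round to an integer level."""
    return [round(v / step) for v in values]


def dequantize(levels, step):
    """What a decoder does: multiply the levels back by the step."""
    return [level * step for level in levels]


def empty_bin_ratio(levels, max_level):
    """Fraction of histogram bins 1..max_level (by magnitude) that contain no samples."""
    counts = Counter(abs(level) for level in levels)
    return sum(1 for b in range(1, max_level + 1) if counts[b] == 0) / max_level


random.seed(0)
# Synthetic AC coefficients: exponential magnitudes with a random sign
coefs = [random.choice((-1, 1)) * random.expovariate(1 / 20) for _ in range(10_000)]

single = quantize(coefs, 2)                              # saved once with step 2
double = quantize(dequantize(quantize(coefs, 5), 5), 2)  # step 5 first, then step 2

print("single compression, empty bins:", empty_bin_ratio(single, 20))
print("double compression, empty bins:", empty_bin_ratio(double, 20))
```

Because the first pass leaves only multiples of 5, the second pass can populate only some of the step-2 bins, producing a periodic pattern of gaps that a once-compressed histogram lacks. When the second step is the larger one, the artifact tends to show up as periodic peaks rather than gaps.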

2.3. Error Level Analysis

This algorithm detects foreign objects injected into the original image by analyzing the quantization of pixel blocks across the image. The quantization of pasted objects (as well as objects drawn in an editor) may differ significantly from that of other parts of the image, especially if the original image, the injected objects, or both were previously compressed in JPEG format.

While this may not be a perfect example, it still makes it very clear which of the four cats were originally in the image and which were pasted during editing: the quantization deviation is significantly higher for the two cats on the left. The effect would be even more pronounced if the pasted object were taken from a different image.
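The principle can be illustrated without a JPEG library. Error level analysis generally re-compresses the image and looks at how much each 8x8 block changes: blocks that already went through the same quantization barely move, while pasted or painted blocks move more. The toy below makes strong simplifying assumptions: one uniform quantization step for all 64 coefficients (real JPEG uses a table), no clipping or entropy coding, and synthetic blocks. All names are ours; it is a sketch of the idea, not the authors' implementation.

```python
import math
import random

N = 8  # JPEG block size
# Orthonormal DCT-II basis: BASIS[k][x] = c(k) * cos((2x + 1) * k * pi / 16)
BASIS = [[(math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N))
          * math.cos((2 * x + 1) * k * math.pi / (2 * N))
          for x in range(N)] for k in range(N)]


def dct2(block):
    """2-D DCT of an 8x8 block given as a list of rows."""
    return [[sum(BASIS[u][x] * BASIS[v][y] * block[x][y]
                 for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coefs):
    """Inverse 2-D DCT (the transposed orthonormal basis)."""
    return [[sum(BASIS[u][x] * BASIS[v][y] * coefs[u][v]
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def compress(block, step):
    """Toy JPEG round trip: DCT, uniform quantization, inverse DCT, integer pixels."""
    quantized = [[round(c / step) * step for c in row] for row in dct2(block)]
    return [[round(p) for p in row] for row in idct2(quantized)]


def block_error_level(block, step):
    """Mean absolute change of the block's DCT coefficients when re-quantized."""
    return sum(abs(c - round(c / step) * step)
               for row in dct2(block) for c in row) / (N * N)


random.seed(1)
STEP = 16  # uniform quantization step standing in for a JPEG quality setting


def random_block():
    # Mid-range pixel values; the toy round trip does not clip to 0..255
    return [[random.randint(64, 192) for _ in range(N)] for _ in range(N)]


original = [compress(random_block(), STEP) for _ in range(4)]  # went through "JPEG"
pasted = random_block()  # e.g. painted in an editor, never quantized with STEP

for i, block in enumerate(original):
    print(f"original block {i}: {block_error_level(block, STEP):.2f}")
print(f"pasted block:     {block_error_level(pasted, STEP):.2f}")
```

For the previously compressed blocks, the only deviation left comes from rounding pixels to integers, so their error level stays well below that of the pasted block.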

2.4. Copy/Move Forgery and Clone Detection

Transplanting parts of an image across the same picture is an extremely common forgery technique. For example, an editor may mask the existence of a certain object by “patching” it with a piece of background cloned from the same image, or copy or move existing objects around the picture. Since the cloned pieces share the compression history of the rest of the image, their quantization will look very similar to everything else, so we must employ methods that identify image blocks that look artificially similar to each other.


The second image is fake. Note that the other umbrella was not simply copied and pasted: the pasted object was scaled to appear larger (closer). The third image outlines the matching points that allow the cloned area to be detected.

Our solution employs several approaches including direct tile comparison across the image, as well as complex algorithms that are able to identify cloned areas even if varying transparency levels are applied to pasted pieces, or if an object is placed on top of the pasted area.
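The simplest of these approaches, direct tile comparison, can be sketched in the spirit of the exact-match method described in [2]. Every overlapping b×b block is used as a dictionary key (the reference sorts blocks lexicographically; hashing finds the same duplicates), and identical pairs are grouped by their shift vector, so a genuinely cloned region shows up as many pairs sharing one shift. This sketch only catches unmodified copies; scaled objects such as the umbrella above, or blended transparency, need the more robust methods mentioned in the text. Function and parameter names are ours.

```python
import random
from collections import defaultdict


def find_cloned_blocks(img, b=4, min_count=4):
    """Exact-match copy-move detection on a 2-D list of grayscale pixels.

    Returns {shift_vector: [(first_pos, second_pos), ...]} for every shift vector
    shared by at least `min_count` pairs of identical b x b blocks.
    """
    h, w = len(img), len(img[0])
    positions = defaultdict(list)  # block content -> top-left corners where it occurs
    for y in range(h - b + 1):
        for x in range(w - b + 1):
            key = tuple(tuple(img[y + dy][x:x + b]) for dy in range(b))
            positions[key].append((y, x))
    by_shift = defaultdict(list)
    for corners in positions.values():
        for i, (y1, x1) in enumerate(corners):
            for y2, x2 in corners[i + 1:]:
                by_shift[(y2 - y1, x2 - x1)].append(((y1, x1), (y2, x2)))
    return {s: pairs for s, pairs in by_shift.items() if len(pairs) >= min_count}


random.seed(3)
img = [[random.randint(0, 255) for _ in range(32)] for _ in range(32)]
for dy in range(8):  # clone an 8x8 patch from (2, 3) to (20, 18)
    img[20 + dy][18:26] = img[2 + dy][3:11]

clones = find_cloned_blocks(img)
for shift, pairs in clones.items():
    print("shift", shift, "->", len(pairs), "matching blocks")
```

Flat areas such as a clear sky contain many identical blocks and would flood this naive version with matches; practical implementations discard such blocks or require a minimum shift length.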

2.5. Inconsistent Image Quality

JPEG is a lossy format. Every time the same image is opened and saved in the JPEG format, some apparent visual quality is lost and some artifacts appear. You can easily reproduce the issue by opening a JPEG file, saving it, closing, then opening and saving again. Repeat several times, and you’ll start noticing the difference; sooner if higher compression levels are specified.

Visual quality is not standardized and varies greatly between JPEG compression engines. Different JPEG compression algorithms may produce vastly different files even when set to their highest quality setting. As there is no uniform standard among JPEG implementations defining the resulting visual quality of a JPEG file, we had to settle on our own internal scale. This was necessary in order to judge the quality of JPEG files produced by many different engines on the same scale.

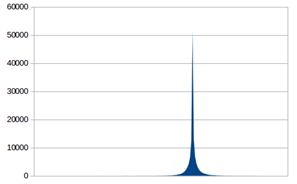

These are the same image: the last three pictures were saved from the original at 90%, 70% and 50% quality, respectively. The higher the level of compression, the more visible the blocking artifacts become. JPEG processes the image in blocks of 8x8 pixels, and these blocks become more and more clearly visible as the image is re-saved.

According to our internal scale, JPEG images coming out of the camera normally have an apparent visual quality of roughly 80% (it can be more or less, depending on camera settings and the JPEG compression engine employed by the camera processor). As a result, we expect an unaltered image to fall approximately within that range. However, as JPEG is a lossy compression algorithm, every time a JPEG image is opened and saved as a JPEG file again, some apparent visual quality is lost, even if the lowest compression (highest quality) setting is used.

The simplest way to estimate the apparent visual quality of an existing JPEG file would be applying certain formulas to channel quantization tables specified in the file’s tags. However, altering the tags is all too easy, so our solution uses pixel-level analysis that can “see through” the quantization matrix.
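For encoders that follow the Independent JPEG Group (libjpeg) convention, the formula-based estimate can be made concrete. libjpeg scales the example luminance table from Annex K of the JPEG standard by a factor derived from the quality setting, so the setting can be recovered approximately by inverting that scaling. This is only the naive, tag-based estimate the paragraph warns about: it assumes an IJG-style encoder (many cameras use their own tables) and it trusts the tables stored in the file. The function names are ours.

```python
# Example luminance quantization table from Annex K of ITU-T T.81, row by row
STD_LUMA = [
    16, 11, 10, 16, 24, 40, 51, 61,
    12, 12, 14, 19, 26, 58, 60, 55,
    14, 13, 16, 24, 40, 57, 69, 56,
    14, 17, 22, 29, 51, 87, 80, 62,
    18, 22, 37, 56, 68, 109, 103, 77,
    24, 35, 55, 64, 81, 104, 113, 92,
    49, 64, 78, 87, 103, 121, 120, 101,
    72, 92, 95, 98, 112, 100, 103, 99,
]


def ijg_table(quality: int) -> list:
    """Luminance table an IJG/libjpeg-style encoder derives for quality 1..100."""
    quality = max(1, min(100, quality))
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    return [min(255, max(1, (q * scale + 50) // 100)) for q in STD_LUMA]


def estimate_ijg_quality(table: list) -> int:
    """Invert the IJG scaling using the average ratio to the Annex K table."""
    scale = 100 * sum(table) / sum(STD_LUMA)
    quality = (200 - scale) / 2 if scale <= 100 else 5000 / scale
    return max(1, min(100, round(quality)))


for q in (30, 50, 75, 90):
    print(q, "->", estimate_ijg_quality(ijg_table(q)))
```

Because anyone can write arbitrary tables into a file, an estimate like this is easy to fool, which is the motivation for the pixel-level analysis described above.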

3. Non-Applicable Algorithms

Some techniques sound great on paper but don’t work that well (if at all) in real life. The algorithms described below may be used in lab tests performed under controlled conditions, but stand no chance in real-life applications.

3.1. Block artifact grid detection

This method is based on ideas presented in [2] and [3]. The algorithm analyzes discrete cosine transform coefficients calculated over a set of 8x8 JPEG DCT blocks. Comparing the coefficients of different blocks with one another can supposedly identify foreign objects, such as those pasted from another image. In reality, these differences turned out to be statistically insignificant and easily affected by subsequent compression when saving the final JPEG image. In addition, discrepancies can easily arise in the original image at the borders between different color zones.
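To show what a blocking grid is, the sketch below uses a much simpler pixel-domain proxy than the DCT-coefficient comparison described above. It measures where the strongest column-to-column jumps fall modulo 8, which gives the horizontal phase of the 8x8 grid. Content pasted with a misaligned grid would, in principle, show a different phase locally. The perfectly blocky synthetic images are an assumption that makes the signal unmistakable; as the paragraph explains, real photographs rarely behave this way.

```python
import random


def grid_phase(img):
    """Estimate the horizontal phase (0..7) of an 8x8 blocking grid.

    Returns k such that the strongest column-to-column jumps occur between
    columns x and x + 1 with x % 8 == k; for a grid starting at column 0, k == 7.
    """
    energy = [0.0] * 8
    counts = [0] * 8
    for row in img:
        for x in range(len(row) - 1):
            energy[x % 8] += abs(row[x + 1] - row[x])
            counts[x % 8] += 1
    means = [e / c for e, c in zip(energy, counts)]
    return max(range(8), key=lambda k: means[k])


def blocky_image(size, shift):
    """Synthetic image of flat 8x8 blocks whose grid starts at column `shift`."""
    n = size // 8 + 1
    levels = [[random.randint(0, 255) for _ in range(n)] for _ in range(n)]
    # For x < shift, (x - shift) // 8 == -1 picks the last level: a separate block
    return [[levels[y // 8][(x - shift) // 8] for x in range(size)] for y in range(size)]


random.seed(5)
print("aligned grid phase:", grid_phase(blocky_image(64, shift=0)))
print("shifted grid phase:", grid_phase(blocky_image(64, shift=3)))
```

On such clean data the phase is unambiguous. The authors report that the corresponding signal in real images is statistically insignificant and easily erased by recompression.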

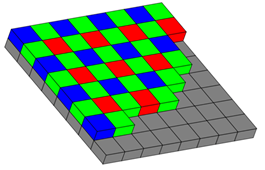

3.2. Color filter array interpolation

Most modern digital sensors are based on the Bayer array.

This algorithm makes use of the fact that most modern digital cameras use sensors based on a Bayer array, in which each sensor element records only one color. The full color of each pixel is determined by interpolating the readings of adjacent red, green and blue sensor elements [4].

Based on this fact, a statistical comparison of adjacent blocks of pixels can supposedly identify discrepancies. In reality, we discovered no statistically meaningful differences, especially if an image was compressed and re-compressed with a lossy algorithm such as JPEG. This method would probably give more meaningful results if lossless formats such as TIFF were widely used. In real-life applications, however, the lossy JPEG format is the de facto standard for storing digital pictures, so the color filter array interpolation algorithm is of little use there.
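The property this method relies on can be shown with a toy example. The sketch assumes an RGGB Bayer layout and plain bilinear interpolation of the green channel; real cameras use more elaborate, proprietary demosaicing. Under these assumptions, every interpolated green value is exactly the mean of its four measured green neighbors, and a pasted region that breaks this relation stands out. The names and the layout are illustrative assumptions.

```python
import random


def is_green_site(y, x):
    """RGGB Bayer layout (an assumption): green samples where row + column is odd."""
    return (y + x) % 2 == 1


def demosaic_green(raw):
    """Bilinear green interpolation: each non-green site gets the mean of 4 green neighbors."""
    h, w = len(raw), len(raw[0])
    green = [row[:] for row in raw]  # green sites keep their measured values
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            if not is_green_site(y, x):
                green[y][x] = (raw[y - 1][x] + raw[y + 1][x] + raw[y][x - 1] + raw[y][x + 1]) / 4
    return green


def cfa_residual(green, y0, y1, x0, x1):
    """Mean |value - bilinear prediction| at interpolated sites in rows y0..y1-1, cols x0..x1-1."""
    errors = []
    for y in range(max(y0, 1), min(y1, len(green) - 1)):
        for x in range(max(x0, 1), min(x1, len(green[0]) - 1)):
            if not is_green_site(y, x):
                predicted = (green[y - 1][x] + green[y + 1][x] + green[y][x - 1] + green[y][x + 1]) / 4
                errors.append(abs(green[y][x] - predicted))
    return sum(errors) / len(errors)


random.seed(7)
raw = [[random.randint(0, 255) for _ in range(32)] for _ in range(32)]  # sensor readings
green = demosaic_green(raw)

tampered = [row[:] for row in green]
for y in range(10, 20):  # paste content that never went through this demosaicing
    for x in range(10, 20):
        tampered[y][x] = random.randint(0, 255)

print("untouched image:", cfa_residual(green, 0, 32, 0, 32))
print("pasted region:  ", cfa_residual(tampered, 10, 20, 10, 20))
```

Even mild JPEG compression perturbs these exact relations, which is consistent with the authors' finding that the check loses its power on real-world JPEG files.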

4. Implementation

The algorithms described in this paper made it into a commercial product. They were implemented as a module for the forensic tool Belkasoft Evidence Center. The Photo Forgery Detection module enables Evidence Center to estimate how genuine images are by calculating the probability of alterations. The product is aimed at a digital forensics audience, allowing investigators, lawyers and law enforcement officials to validate whether digital pictures submitted as evidence are in fact acceptable.

Using Evidence Center equipped with the Photo Forgery Detection module to analyze authenticity of digital images is easy. The analysis is completely automated. You can find more details at https://belkasoft.com/forgery-detection.

4.1. Using Forgery Detection

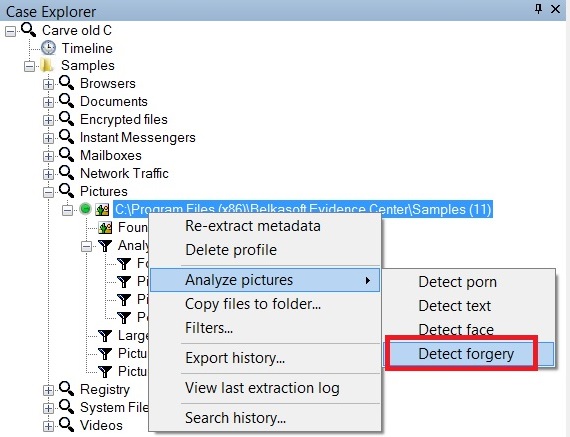

To detect forged pictures, select the image profile of interest, right-click it, choose the Analyze pictures context menu item, and then choose Detect forgery. The analysis will start.

After the analysis, the Forged pictures filter under the Analysis results profile subnode in Case Explorer is filled with the pictures in which forgery was detected. The total number of forged pictures is shown in the filter node.

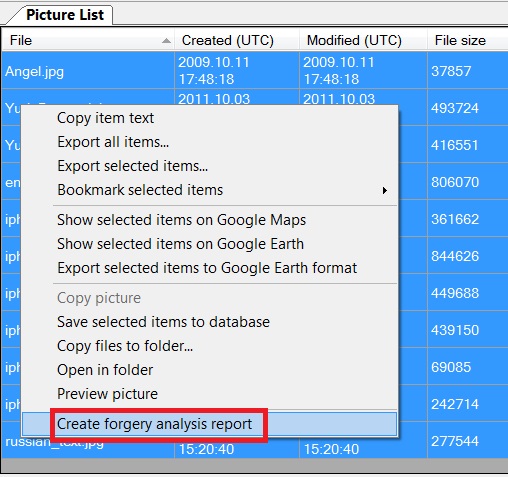

After the analysis, you can create a special Forgery detection report. To do so, select the pictures of interest, right-click and choose Create forgery analysis report.

The forgery analysis report looks like this:

5. Conclusion and Further Work

We developed a comprehensive software solution implementing algorithms based on statistical analysis of the information available in digital images. A neural network is employed to produce the final decision, estimating the probability that an image is altered rather than original. Some of the algorithms employed in our solution are based on the encoding and compression techniques, as well as the compression artifacts, inherent to the de facto standard JPEG algorithm. Most alterations made to JPEG files are detected right away with high probability.

Notably, our solution in its current state may miss certain alterations performed on uncompressed images or pictures compressed with a lossless codec.

Consider, for example, a scenario in which an editor pastes slices from one RAW (TIFF, PNG…) image file into another losslessly compressed file and then saves the final JPEG only once. In this case, our solution will be able to tell that the image was in fact modified in some graphic editing software, but will likely be unable to detect the exact location of the foreign objects. However, if the pasted pieces were taken from a JPEG file (which is rather likely, as most pictures today are stored as JPEGs), our solution will likely be able to pinpoint the exact location of the patches.

6. About the Authors

Alexey Kuznetsov is the Head of the GRC (Governance, Risk and Compliance) Department at the International Banking Institute. Alexey is an expert in business process modeling.

Yakov Severyukhin is the Head of the Photoreport Analysis Laboratory at the International Banking Institute. Yakov is an expert in digital image processing.

Oleg Afonin is Belkasoft’s sales and marketing director. He is an expert and consultant in computer forensics.

Yuri Gubanov is the CEO of Belkasoft and a digital forensics expert. Yuri is a frequent speaker at well-known industry conferences such as CEIC, HTCIA, FT-Day, ICDDF, TechnoForensics and others.

The authors can be contacted by email at research@belkasoft.com

7. References

- [1] Protecting Journalistic Integrity Algorithmically. http://lemonodor.com/archives/2008/02/protecting_journalistic_integrity_algorithmically.html#c22564

- [2] Detection of Copy-Move Forgery in Digital Images. http://www.ws.binghamton.edu/fridrich/Research/copymove.pdf

- [3] John Graham-Cumming’s Clone Tool Detector. http://www.jgc.org/blog/2008/02/tonight-im-going-to-write-myself-aston.html

- [4] Demosaicking: Color Filter Array Interpolation. http://www.ece.gatech.edu/research/labs/MCCL/pubs/dwnlds/bahadir05.pdf

- [5] Retrieving Digital Evidence: Methods, Techniques and Issues. retrieving-digital-evidence-methods-techniques-and-issues