AI in Digital Forensics: Ethical Considerations

Artificial Intelligence (AI) and Machine Learning (ML) are becoming increasingly common in Digital Forensics and Incident Response (DFIR). Examiners in law enforcement use AI to automate tasks such as facial recognition, detection and categorization of pictures with specific content, speech-to-text conversion, and text data analysis, while corporate security teams increasingly employ AI for anomaly detection and log analysis. These tools can accelerate workflows and help manage the growing volume of digital evidence. However, introducing AI into DFIR also raises ethical concerns that must be addressed carefully.

In this article, we are going to tackle several key ethical considerations that DFIR professionals must take into account when using AI tools.

Why ethics matter in AI-driven DFIR

AI does more than just process data: it handles sensitive information, shapes legal outcomes, and affects people’s lives. On top of this, AI is still an evolving technology, so its automated results should be used with caution. Many AI systems function as “black boxes,” meaning it is often unclear how they reach their conclusions. An ethical approach to using AI tools is not optional. It is part of doing the job right, especially in forensics.

Let us break down the specific areas where ethical risks often arise and how you can handle them.

Protecting privacy and personal data

When working with sensitive information like private messages, photos, or location records, DFIR specialists must ensure that their use of AI aligns with relevant privacy laws and frameworks, such as the General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), or the ISO/IEC 29100 privacy framework, depending on jurisdiction and context. Ethical data handling practices include:

- Only process what matters: Follow data minimization principles to avoid collecting and processing unnecessary data. Such an approach involves being selective about what you feed your AI tools, focusing only on relevant information for the case at hand.

- Secure your environment: Personally identifiable information (PII) should never leave secure storage or run in an untrusted environment.

- Check your tools’ policies: If you use third-party AI, especially cloud-based, review the provider’s terms. Some retain access to user data, which may conflict with your obligations under GDPR or CCPA, or other regulations.

Privacy is more than compliance—it is about respecting the people behind the data.
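As an illustration of data minimization, here is a minimal Python sketch that narrows a set of messages to the case scope and masks email addresses before anything is handed to an AI tool. The `Message` type, the `minimize` function, and the email pattern are hypothetical and only show the idea; real forensic tools expose their own artifact schemas, and the original evidence stays untouched.

```python
import re
from dataclasses import dataclass
from datetime import datetime

# Hypothetical record type used only for this illustration.
@dataclass
class Message:
    sender: str
    sent_at: datetime
    text: str

# Deliberately simple pattern; it will not catch every address format.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def minimize(messages, start, end, relevant_senders):
    """Return copies of in-scope messages with email addresses masked."""
    selected = []
    for m in messages:
        if m.sender not in relevant_senders:
            continue  # outside the scope of the case
        if not (start <= m.sent_at <= end):
            continue  # outside the relevant time window
        masked = EMAIL_RE.sub("[EMAIL]", m.text)
        selected.append(Message(m.sender, m.sent_at, masked))
    return selected
```

Because the function builds new objects, the acquired data is never modified. Only the reduced copy would be passed on for AI analysis.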

The issue of AI bias

It may seem that bias is not directly relevant to DFIR, but it can significantly affect how fairly suspects and victims are treated. AI models that reflect or amplify biases in their training data can misclassify evidence or miss findings in forensic investigations, as in the following examples:

- Evidence is mislabeled: AI might falsely flag evidence based on its bias, leading to wrongful accusations. For instance, an image recognition tool trained mostly on pictures of individuals from one demographic might mistakenly identify people from another demographic as criminals due to underrepresentation.

- Important evidence is ignored: Biases can cause AI tools to overlook or downplay certain types of evidence.

- Low-priority labels for serious cases: Similarly, AI tools used to sort incoming cases might give lower priority to certain types, simply because those types were underrepresented in the training data. As a result, investigators may miss urgent leads.

To reduce bias in your DFIR tools:

- Verify results: Always review AI-generated findings critically, especially when they could significantly impact an investigation. Human judgment remains crucial in DFIR workflows.

- Compare with known cases: Periodically test the tool on past cases where you already know the outcome. Look for signs that the AI might have missed something or handled the case differently than it should have.

- Train with varied examples: This advice is primarily for organizations developing AI tools in-house, like large law enforcement agencies or well-resourced private sector firms with dedicated DFIR teams. Training data should reflect a wide range of scenarios to mitigate bias.

Bias is not just a technical issue: it can lead to wrongful accusations or missed evidence. Treat it like any other risk in your workflow.
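The "compare with known cases" check above can be sketched in a few lines of Python. This is an assumption-laden illustration, not a validated fairness audit: it takes past cases as `(group, predicted_flag, true_flag)` tuples, where `group` is whatever category you want to compare (for example, a case type), and reports error rates per group. The function name and data layout are hypothetical.

```python
from collections import defaultdict

def error_rates_by_group(cases):
    """cases: iterable of (group, predicted_flag, true_flag) tuples.

    Returns {group: {"false_positive_rate": ..., "false_negative_rate": ...}}.
    A rate is None when the group has no cases of the relevant kind.
    """
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for group, predicted, actual in cases:
        if actual:
            counts[group]["tp" if predicted else "fn"] += 1
        else:
            counts[group]["fp" if predicted else "tn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["tp"] + c["fn"]
        rates[group] = {
            "false_positive_rate": c["fp"] / negatives if negatives else None,
            "false_negative_rate": c["fn"] / positives if positives else None,
        }
    return rates
```

A large gap between groups in either rate is a signal to investigate further, not proof of bias on its own, particularly when a group has only a handful of cases.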

Staying transparent

Transparency means documenting what your tools did, how they did it, and how you used the results. Without this record, you can neither fully trust your results nor explain them in court. Maintain good habits:

- Document every step: Track which tool version you used, when, and on what data.

- Flag automated outputs: Always mark what was AI-generated versus human-reviewed.

- Keep control: The analyst, not the machine, should decide what counts as evidence.

This clarity will not just help your team. It will also help in court testimony or internal investigations and will support your accountability.
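One way to make these habits concrete is to write a structured record for every AI tool run. The sketch below is a hypothetical example in Python: the field names, the `audit_record` function, and the tool name are assumptions for illustration, not the log format of any particular product.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(tool, version, input_bytes, output_summary, reviewed_by=None):
    """Build one log entry describing a single AI tool run."""
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "tool": tool,
        "tool_version": version,
        # Hash of the input ties the record to the exact data analyzed.
        "input_sha256": hashlib.sha256(input_bytes).hexdigest(),
        "output_summary": output_summary,
        "ai_generated": True,
        "reviewed_by": reviewed_by,  # None until a human examiner signs off
    }

# Hypothetical tool name and version, for illustration only.
record = audit_record(
    "example-classifier", "1.4.2", b"sample evidence bytes", "3 images flagged"
)
print(json.dumps(record, indent=2))
```

Keeping `ai_generated` and `reviewed_by` as separate fields makes it easy to list every automated finding that no examiner has confirmed yet.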

Legal and regulatory hurdles of AI

AI-generated evidence must meet the same legal standards as any other part of your investigation, which means that findings produced with AI must be admissible in court.

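In the United States, this means meeting the Frye or Daubert standard, depending on the jurisdiction. Frye asks whether the method is generally accepted in the relevant scientific community, while Daubert has the judge assess the method's reliability more broadly, with general acceptance as one factor. In the European Union, the AI Act, which entered into force in August 2024 and applies in stages, restricts certain automated tools, especially those involving biometric data or risk scoring.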

To make AI findings acceptable as evidence:

- Maintain the chain of custody: You need to be able to show where the information came from and how it was handled throughout the process.

- Document your methodology: Describe how the AI worked and what limitations it had, which will help others understand your AI-driven conclusions.

- Study your toolset: Familiarise yourself with the internal logic of your AI tool so you can explain it in court if necessary.

- Stay up-to-date with changing laws: New regulations, like the EU AI Act, restrict certain uses of AI in areas like facial recognition.

Stay informed. Legal frameworks are evolving, and so should your practices.
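To illustrate the chain-of-custody point above, here is a minimal Python sketch of a tamper-evident handling log in which each entry stores the hash of the previous one. It is an assumption about how such a log could be structured, not a legal requirement or any vendor's format, and the function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _hash_body(body):
    """Hash an entry's content in a stable, key-sorted JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_entry(log, actor, action, evidence_sha256):
    """Append a custody entry linked to the previous one by its hash."""
    body = {
        "actor": actor,
        "action": action,
        "evidence_sha256": evidence_sha256,
        "prev_hash": log[-1]["entry_hash"] if log else GENESIS,
    }
    log.append({**body, "entry_hash": _hash_body(body)})

def verify(log):
    """Return True if every entry matches its hash and links to its predecessor."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev_hash or entry["entry_hash"] != _hash_body(body):
            return False
        prev_hash = entry["entry_hash"]
    return True
```

Editing or reordering an earlier entry breaks the chain and makes `verify` return False. Note that this sketch does not detect entries removed from the end of the log, so the latest hash would still need to be stored somewhere trustworthy.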

Building ethical AI into daily practice

Ethical use of AI is not just about rules—it is about how you work every day. These best practices help you maintain control, protect your team, and keep your results trustworthy.

Keep human oversight (Overcoming automation bias)

AI should assist your decisions, not make them for you. While AI-generated output can be very compelling, keep in mind that the more you rely on it, the more careful you need to be.

- Review key outputs manually: These include keyword hits, flagged images, scoring results, and LLM analysis output.

- Understand context: Do not treat data points in isolation. Ask what the full picture tells you.

- Train your team: Analysts should know how each AI tool works, when it can go wrong, and what its outputs mean.

Automation can be very useful, but only if you understand its limitations and know when to rely on your own judgment.

Secure your tools

The integrity of your AI tools matters as much as the integrity and credibility of physical evidence. A compromised tool can lead to flawed findings or lost case data. To protect your AI tools and case data:

- Run AI in isolated environments: Avoid AI tools that send case data across the internet. If you must rely on cloud-based models, use strong encryption, verify vendor security measures, and ensure there are clear legal safeguards in place.

- Control updates: Never deploy new versions without testing. Check each update, whether it is a model, library, or full tool release, in a controlled environment. Confirm that it works as expected and introduces no errors or vulnerabilities.

- Audit software regularly: Keep a detailed inventory of tool versions used in each case. Run security scans and review key components for tampering or weak spots that might impact reliability.

Your AI tools are part of your investigative chain. Their integrity must be treated with the same care as evidence handling.
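As a small illustration of update control and auditing, the Python sketch below checks a tool or model file against the SHA-256 hash recorded when that version was tested and approved. The inventory format (a plain dictionary keyed by file name) and the function names are assumptions made for this example.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def is_approved(path, approved_hashes):
    """approved_hashes: {file name: SHA-256 recorded when the version was tested}."""
    expected = approved_hashes.get(Path(path).name)
    return expected is not None and sha256_of(path) == expected

# Usage example with a throwaway file standing in for a model.
with tempfile.TemporaryDirectory() as tmp:
    model = Path(tmp) / "model.bin"
    model.write_bytes(b"weights-v1")
    approved = {"model.bin": sha256_of(model)}
    ok_before = is_approved(model, approved)  # file matches the approved hash
    model.write_bytes(b"weights-tampered")
    ok_after = is_approved(model, approved)   # file changed after approval
```

Running such a check before each case, and recording the result alongside the tool version, gives you evidence that the tool you used is the one you tested.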

Do not forget the human side

AI systems can reduce exposure to disturbing content by automatically filtering or flagging it, which helps reduce the psychological toll on DFIR examiners. At the same time, you can rely on AI tools to sift through digital noise. However, complete reliance on AI to shield analysts can backfire. Without manual review, there is a risk of misunderstanding the context of the case or failing to recognize new, uncategorized material. To maintain this balance:

- Use filters wisely: Implement AI filters as a preliminary tool for sorting and prioritizing evidence, rather than relying solely on their conclusions.

- Avoid dehumanization: Victims and suspects are individuals with inherent value, not mere data points. Analysts must remain aware of the human impact of their work and avoid becoming overly reliant on automation.

- Build ethics into training: Educational resources such as webinars, certifications, and policies should incorporate guidance on AI ethics to ensure that analysts are equipped to navigate complex moral dilemmas.

By adopting these strategies, you can effectively harness the power of AI while maintaining an understanding of its capabilities and limitations.

Belkasoft’s approach to responsible AI

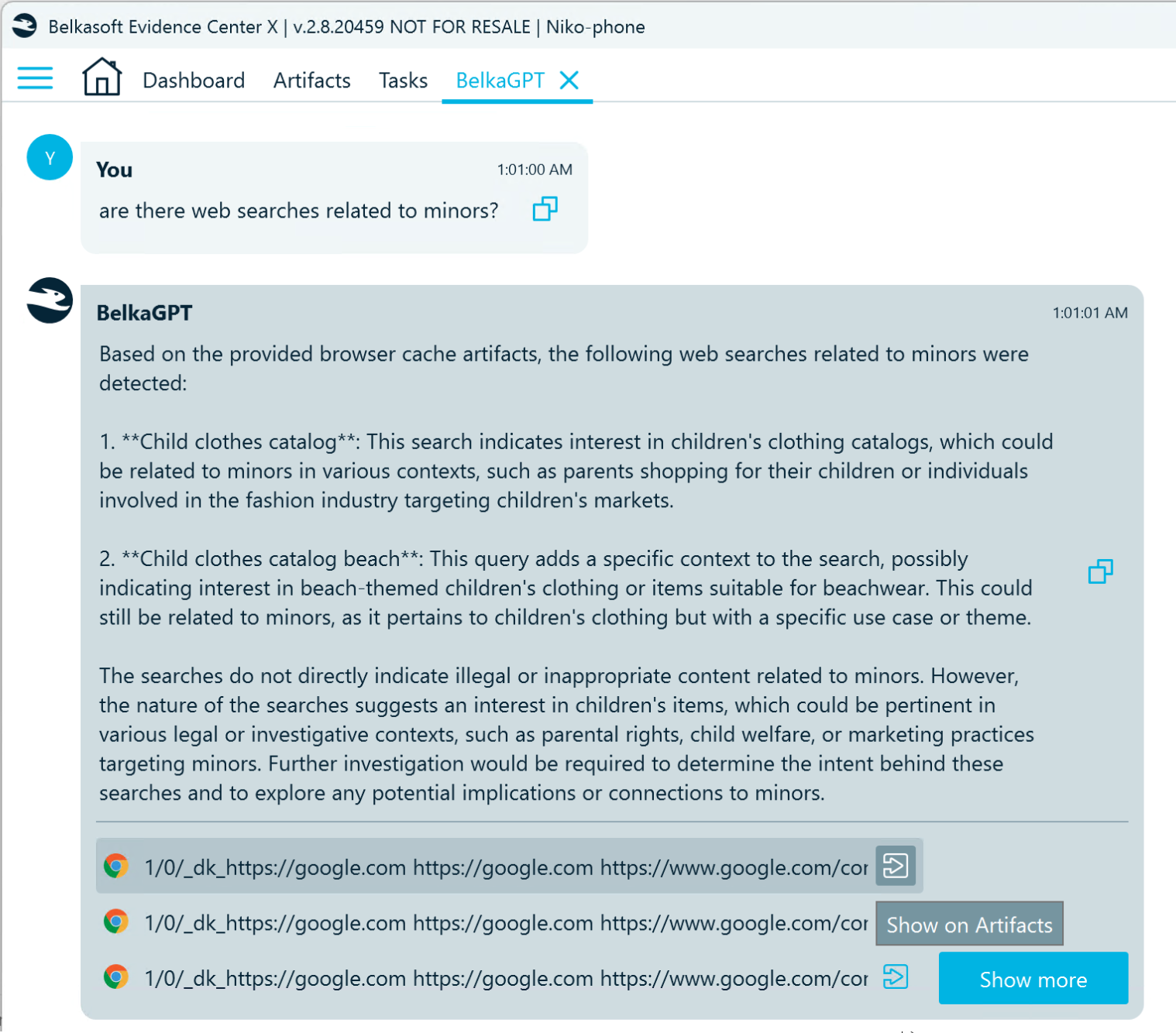

Belkasoft supports the responsible and transparent use of AI in digital forensics. In Belkasoft X, AI-driven features are always paired with direct access to the original artifacts. The star of the show, our AI assistant BelkaGPT, adheres to the following principles:

- Forensically sound data handling: All data is acquired and stored in a forensically sound manner, preserving the integrity of the evidence from the outset and forming a reliable basis for any subsequent AI analysis.

- Offline data processing: All data handling occurs offline to minimize the risk of data loss or corruption. Secure storage ensures that evidence remains protected throughout the investigative process.

- Transparency in automatic findings: No AI-generated finding is considered final without explicit validation by a human examiner, ensuring that the nuanced understanding and contextual awareness of the investigator remain paramount in the decision-making process.

This approach enables investigators to verify AI’s findings, to explain to the court how they were obtained, and to demonstrate that decisions were not made by machines alone.

Final thoughts

Using AI in DFIR is not just about faster results. It is about doing the job responsibly. That means protecting privacy, managing bias, staying transparent, and never letting the machine take over the role of the analyst.

Build these principles into your workflow. Update them as laws and tools evolve. And above all, stay in control.